API Overview

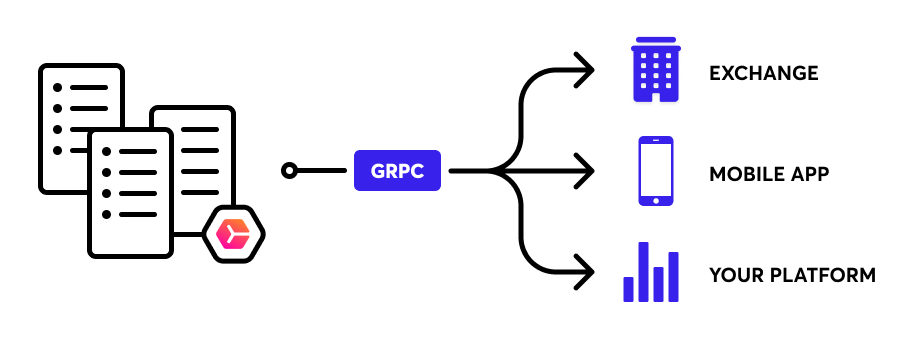

The CryptoMood API provides real-time streams and RPC methods for market sentiment and data requests. It uses Google Protocol Buffers with gRPC.

This documentation describes the models and messages used, with basic usage in the supported languages. Every method or request is also described with sample code and examples. For more extensive examples, visit our public repository.

If you are not planning to develop your own API calls, feel free to use our fully compliant client libraries below.

Client libraries

Examples

Go examples

- Connection

- Historic articles request

- New articles subscription

- Historic news sentiment request

- Historic social sentiment request

- News sentiment subscription

- Social sentiment subscription

Python examples

- Connection

- Historic articles request

- New articles subscription

- Historic news sentiment request

- Historic social sentiment request

- News sentiment subscription

- Social sentiment subscription

Python extras

Nodejs examples

- Connection

- Historic articles request

- New articles subscription

- Historic news sentiment request

- Historic social sentiment request

- News sentiment subscription

- Social sentiment subscription

Prerequisites

# --------------------------------

# Instructions for Python

# --------------------------------

# Make sure you have Python 3.4 or higher

# Ensure you have `pip` version 9.0.1 or higher:

python -m pip install --upgrade pip

# Install gRPC, gRPC tools

python -m pip install grpcio grpcio-tools

# transpile proto file to `*.py` files with

python -m grpc_tools.protoc -I./ --python_out=. --grpc_python_out=. ./types.proto

# This generates the transpiled files `types_pb2.py` and `types_pb2_grpc.py` in the current directory

# --------------------------------

# Instructions for GoLang

# --------------------------------

GIT_TAG="v1.2.0" # change as needed

go get -d -u github.com/golang/protobuf/protoc-gen-go

git -C "$(go env GOPATH)"/src/github.com/golang/protobuf checkout $GIT_TAG

go install github.com/golang/protobuf/protoc-gen-go

# --------------------------------

# Instructions for NodeJS

# --------------------------------

# Follow https://www.npmjs.com/package/google-protobuf

Protocol Buffers (a.k.a., protobuf) are Google's language-neutral, platform-neutral, extensible mechanism for serializing structured data. You can find protobuf's documentation on the Google Developers site.

This doc contains protobuf installation instructions. To install protobuf, you need to install the protocol compiler (used to compile .proto files) and the protobuf runtime for your chosen programming language.

For more info, follow instructions on Google's protocol buffer website

CryptoMood protobuf introduction

As mentioned above, we provide API calls via protocol buffers in two forms: streams and RPC calls.

# RPC call

rpc SayHello(HelloRequest) returns (HelloResponse){

}

- Unary RPCs where the client sends a single request to the server and gets a single response back, just like a normal function call.

# Streaming

rpc LotsOfReplies(HelloRequest) returns (stream HelloResponse){

}

- Server streaming RPCs where the client sends a request to the server and gets a stream to read a sequence of messages back. The client reads from the returned stream until there are no more messages. gRPC guarantees message ordering within an individual RPC call.
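
To make the difference between the two forms concrete, here is a minimal Python sketch with no network involved. A unary call is modelled as a plain function and a server-streaming call as a generator. The names `say_hello` and `lots_of_replies` are hypothetical stand-ins that mirror the proto snippets above; they are not generated gRPC stubs.

```python
from typing import Iterator


def say_hello(name: str) -> str:
    """Unary shape: one request in, one response out."""
    return f"Hello, {name}"


def lots_of_replies(name: str, count: int = 3) -> Iterator[str]:
    """Server-streaming shape: one request in, a sequence of responses out."""
    for i in range(count):
        # Messages are yielded in order, like messages within a single RPC.
        yield f"Hello #{i}, {name}"


# The caller of a unary call gets exactly one value back.
single = say_hello("client")

# The caller of a streaming call reads until the stream is exhausted.
replies = [reply for reply in lots_of_replies("client")]
```

With a real generated stub the calling pattern is the same: a unary method returns one message, and a streaming method returns an iterator you loop over.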

Connection & Authentication

const fs = require("fs");
const grpc = require("grpc"); // assumption: the `grpc` package; `@grpc/grpc-js` exposes the same calls used here
const protoLoader = require("@grpc/proto-loader");

// Load the protobuf definitions
const proto = grpc.loadPackageDefinition(
  protoLoader.loadSync("types.proto", {
    keepCase: true,
    longs: String,
    enums: String,
    defaults: true,
    oneofs: true
  })
);

// Initialize the MessagesProxy service. You have to provide a valid host address and a valid path to the .pem file
const client = new proto.MessagesProxy(
  SERVER,
  grpc.credentials.createSsl(fs.readFileSync('./cert.pem'))
);

import grpc

# Create credentials and open a secure channel. You have to provide a valid path to the .pem file and a valid host address.
creds = grpc.ssl_channel_credentials(open("./cert.pem", 'rb').read())
channel = grpc.secure_channel(SERVER_ADDRESS, creds)

// Install protoc [https://github.com/golang/protobuf]
// and protoc-gen-go [https://github.com/golang/protobuf/tree/master/protoc-gen-go],
// then transpile the proto file to a `*.go` file with
// `protoc -I .. -I $GOPATH/src --go_out=plugins=grpc:./ ../types.proto`
// This generates the transpiled file in the current directory.
// To follow Go conventions, move it to a directory named e.g. types.

// Load credentials
creds, err := credentials.NewClientTLSFromFile("./cert.pem", "")
if err != nil {
    panic(err)
}

// Dial the server; WithBlock waits until the connection is up or the timeout expires
conn, err := grpc.Dial(Server, grpc.WithTransportCredentials(creds),
    grpc.WithTimeout(5*time.Second), grpc.WithBlock())
if err != nil {
    panic(err)
}
defer conn.Close()

Each client can generate a token to authorize RPC calls on the gRPC server located at apiv1.cryptomood.com:443.

All clients have to use an X.509 certificate to ensure a secure connection.

You can download the certificate here

After a successful connection, you can use subscriptions as described in the sections below.

from google.protobuf import empty_pb2
import types_pb2_grpc

# Initialize the required service and call the required method (in this case a subscription)
stub = types_pb2_grpc.MessagesProxyStub(channel)
stream = stub.SubscribeArticle(empty_pb2.Empty())

# Read data indefinitely
for entry in stream:
    print(entry)

// Subscribe to required stream and listen to incoming data

let channel = client.SubscribeArticle();

channel.on("data", function(message) {

console.log(message);

});

// Initialize the required service and call the required method (in this case a subscription)
proxyClient := types.NewMessagesProxyClient(conn)
sub, err := proxyClient.SubscribeArticle(context.Background(), &empty.Empty{})
if err != nil {
    panic(err)
}

// Read data until the server closes the stream or an error occurs
for {
    msg, err := sub.Recv()
    if err == io.EOF {
        // The server closed the stream; no more messages will arrive
        break
    }
    if err != nil {
        _ = sub.CloseSend()
        fmt.Println("Closing connection to server:", err)
        break
    }
    fmt.Println(msg.Base.Content)
}

Protocol buffers make it easy to implement your own client libraries. You can think of the protobuf file as a file with definitions for our API.

Each supported language has tools for using these definitions; e.g. Node.js can load the *.proto file directly and use its API. With Python, we recommend generating classes from this file with the gRPC tools.

This documentation provides basic examples which you can use with little to no effort.

Data calls

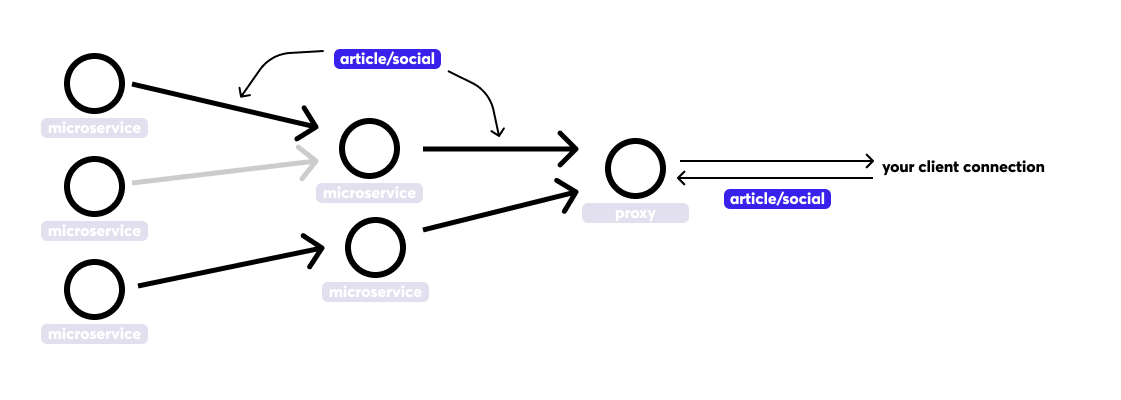

Our internal microservices communicate with each other using gRPC. The pieces of information exchanged between these services are called data models. They represent entities which are processed (analyzed) and distributed.

We distinguish them by where they come from:

- Social networks - social

- News websites - article

- Videos (currently only YouTube) - video

- Messaging platforms (Telegram, etc.) - user_message

Article

Requesting old articles

const client = new proto.HistoricData(

SERVER,

grpc.credentials.createSsl(fs.readFileSync(CERT_FILE_PATH))

);

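client.HistoricArticles({ from: { seconds: 1561400800}, to: { seconds: 1561428800}}, function(err, res) {
  // The callback receives an error first and the response second
  if (err) {
    console.error(err);
    return;
  }
  console.log(res);
});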

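# Example: https://github.com/cryptomood/api/blob/master/python/HistoricData/HistoricArticles/client.py
from google.protobuf.timestamp_pb2 import Timestamp

# Same time window as in the Node.js and Go examples
from_time = Timestamp(seconds=1561400800)
to_time = Timestamp(seconds=1561428800)
stub = types_pb2_grpc.HistoricDataStub(channel)

# `from` is a reserved word in Python, so the fields are passed as keyword arguments from a dict
historic_request_kwargs = {'from': from_time, 'to': to_time}
req = types_pb2.HistoricRequest(**historic_request_kwargs)
article_items = stub.HistoricArticles(req)
for article in article_items.items:
    print(article.base.id, article.base.content)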

historicClient := types.NewHistoricDataClient(conn)
historicRequest := &types.HistoricRequest{From: &timestamp.Timestamp{Seconds: 1561400800},
    To: &timestamp.Timestamp{Seconds: 1561428800}}
sub, err := historicClient.HistoricArticles(context.Background(), historicRequest)
if err != nil {
    panic(err)
}
fmt.Println(len(sub.Items))

Subscribing to articles stream

const client = new proto.MessagesProxy(

SERVER,

grpc.credentials.createSsl(fs.readFileSync('./cert.pem'))

);

let channel = client.SubscribeArticle();

channel.on("data", function(message) {

console.log(message);

});

stub = types_pb2_grpc.MessagesProxyStub(channel)
article_stream = stub.SubscribeArticle(empty_pb2.Empty())
for msg in article_stream:
    print(msg)

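proxyClient := types.NewMessagesProxyClient(conn)
sub, err := proxyClient.SubscribeArticle(context.Background(), &empty.Empty{})
if err != nil {
    panic(err)
}
for {
    msg, err := sub.Recv()
    if err != nil {
        // io.EOF means the server closed the stream; any other error is a failure
        break
    }
    fmt.Println(msg)
}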

Articles are data models representing blog posts, articles and other pieces of information which appear on news websites. You can request old articles using an RPC call or subscribe to incoming new articles.

Basic model for news and articles. Its weight depends on Alexa ranks.

| Field | Type | Label | Description |
|---|---|---|---|
| base | BaseModel | | |
| sentiment | SentimentModel | | |
| named_entities | NamedEntitiesModel | | named entities from content |
| title_data | NamedEntitiesModel | | named entities from title |

Dataset

Service for requesting data from dataset

| Method Name | Request Type | Response Type | Description |

|---|---|---|---|

| Assets | google.protobuf.Empty | AssetItems | returns all supported assets |

MessagesProxy service

Service for entries streaming

| Method Name | Request Type | Response Type | Description |

|---|---|---|---|

| SubscribeBaseArticle | AssetsFilter | PublicModel stream | |

| SubscribeArticle | AssetsFilter | Article stream |

Sentiments service

A sentiment message holds information about aggregated sentiment for a specific time window (M1, H1). It is emitted after each time window closes or is updated (for a specific asset or resolution). If your application needs to receive sentiment updates for only one specific asset, it needs to be filtered on your side.

Sentiment candles are divided to two basic groups - news sentiment and social sentiment. Their payload is the same.
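
Filtering on your side can be as small as a generator wrapped around the stream. The sketch below is illustrative only: `Candle` is a hypothetical stand-in for the generated AggregationCandle class (only two fields are modelled), and `only_asset` is a helper name introduced here, not part of the API.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass
class Candle:
    """Hypothetical stand-in for AggregationCandle (only two fields modelled)."""
    asset: str
    resolution: str


def only_asset(stream: Iterable[Candle], asset: str) -> Iterator[Candle]:
    """Yield only the candles that belong to the given asset."""
    for candle in stream:
        if candle.asset == asset:
            yield candle


# Any iterable works here, including the iterator returned by a streaming call.
incoming = [Candle("BTC", "M1"), Candle("ETH", "M1"), Candle("BTC", "H1")]
btc_only = list(only_asset(incoming, "BTC"))
```

Because the helper is lazy, it can wrap an endless subscription without buffering messages.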

| Method Name | Request Type | Response Type | Description |

|---|---|---|---|

| HistoricSocialSentiment | SentimentHistoricRequest | AggregationCandle stream | |

| HistoricNewsSentiment | SentimentHistoricRequest | AggregationCandle stream | |

| SubscribeSocialSentiment | AggregationCandleFilter | AggregationCandle stream | |

| SubscribeNewsSentiment | AggregationCandleFilter | AggregationCandle stream |

Subscribing to sentiment stream

const client = new proto.Sentiments(

SERVER,

grpc.credentials.createSsl(fs.readFileSync('./cert.pem'))

);

let channel = client.SubscribeNewsSentiment({ resolution: 'M1', assets_filter: { assets: ['BTC']}});

channel.on("data", function(message) {

console.log(message);

});

stub = types_pb2_grpc.SentimentsStub(channel)
# Stub methods take a request message, so build the AggregationCandleFilter first
sentiment_stream_request = types_pb2.AggregationCandleFilter(
    resolution='M1',
    assets_filter=types_pb2.AssetsFilter(assets=['BTC']),
)
sentiment_stream = stub.SubscribeNewsSentiment(sentiment_stream_request)
for sentiment in sentiment_stream:
    print(sentiment)

proxyClient := types.NewSentimentsClient(conn)
sub, err := proxyClient.SubscribeNewsSentiment(context.Background(), &types.AggregationCandleFilter{
    Resolution: "M1",
    AssetsFilter: &types.AssetsFilter{
        Assets: []string{"BTC"},
    },
})
if err != nil {
    panic(err)
}
for {
    msg, err := sub.Recv()
    if err != nil {
        // io.EOF means the server closed the stream; any other error is a failure
        break
    }
    fmt.Println(msg)
}

AggregationCandle

A candle message holds information about aggregated sentiment for a specific time window. It is emitted for each changed symbol. If your application needs to receive sentiment updates for each asset, it needs to subscribe repeatedly.

| Field | Type | Label | Description |
|---|---|---|---|
| id | AggId | | used for constructing time-based keys |
| asset | string | | |
| resolution | string | | |
| pv | int64 | | counter for positive items |
| nv | int64 | | counter for negative items |
| ps | double | | positive sentiment sum |
| ns | double | | negative sentiment sum |
| a | double | | aggregated value |
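
Since `ps` and `ns` are sums and `pv` and `nv` are counters, per-item averages can be derived on the client. The sketch below is one possible reading of those fields, not a documented formula: `Candle` is a hypothetical stand-in for the generated class, and the derived names (`mean_positive`, `mean_negative`, `positive_share`) are introduced here for illustration. It deliberately does not try to reproduce the `a` field, whose formula is not described in this document.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Candle:
    """Hypothetical stand-in holding the four counters/sums of AggregationCandle."""
    pv: int    # counter for positive items
    nv: int    # counter for negative items
    ps: float  # positive sentiment sum
    ns: float  # negative sentiment sum


def safe_div(total: float, count: int) -> Optional[float]:
    """Return total / count, or None when there is nothing to average."""
    return total / count if count else None


def summarize(candle: Candle) -> Dict[str, Optional[float]]:
    """Derive per-item averages and the share of positive items."""
    return {
        "mean_positive": safe_div(candle.ps, candle.pv),
        "mean_negative": safe_div(candle.ns, candle.nv),
        "positive_share": safe_div(candle.pv, candle.pv + candle.nv),
    }


summary = summarize(Candle(pv=3, nv=1, ps=1.5, ns=-0.4))
```

Returning `None` for empty windows avoids a division by zero when a candle has no positive or no negative items.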

AggId

| Field | Type | Label | Description |

|---|---|---|---|

| year | int32 | ||

| month | int32 | ||

| day | int32 | ||

| hour | int32 | ||

| minute | int32 |
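
The AggId fields map naturally onto a calendar timestamp. The sketch below assumes the values are expressed in UTC, which this document does not state, and the key format produced by `time_key` is a hypothetical example rather than the format used by the service. `AggId` here is a plain dataclass standing in for the generated message.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AggId:
    """Plain stand-in for the AggId message."""
    year: int
    month: int
    day: int
    hour: int
    minute: int


def to_datetime(agg_id: AggId) -> datetime:
    """Convert an AggId to a timezone-aware datetime (assumption: UTC)."""
    return datetime(agg_id.year, agg_id.month, agg_id.day,
                    agg_id.hour, agg_id.minute, tzinfo=timezone.utc)


def time_key(agg_id: AggId) -> str:
    """Build a sortable time-based key (hypothetical format: YYYYMMDDHHMM)."""
    return to_datetime(agg_id).strftime("%Y%m%d%H%M")


example_key = time_key(AggId(2019, 6, 24, 18, 5))
```

A zero-padded key like this sorts lexicographically in the same order as the underlying time windows.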

AggregationCandleFilter

| Field | Type | Label | Description |
|---|---|---|---|
| resolution | string | | resolution for candle - M1/H1 |
| assets_filter | AssetsFilter | | |

HistoricData

Service for requesting historic data

| Method Name | Request Type | Response Type | Description |

|---|---|---|---|

| HistoricBaseArticles | HistoricRequest | PublicModel stream | |

| HistoricArticles | HistoricRequest | Article stream |

Requesting historic articles

const client = new proto.HistoricData(
  SERVER,
  grpc.credentials.createSsl(fs.readFileSync('./cert.pem'))
);
// `to` is excluded from the results, so it has to be later than `from` (here: one day later)
client.HistoricArticles({ from: { seconds: 1546300800}, to: { seconds: 1546387200}}, (err, res) => { console.log(err || res)})

historic_request_kwargs = {'from': from_time, 'to': to_time}
req = types_pb2.HistoricRequest(**historic_request_kwargs)
article_items = stub.HistoricArticles(req)

historicStub := types.NewHistoricDataClient(conn)
historicRequest := &types.HistoricRequest{From: &timestamp.Timestamp{Seconds: 1546300800}, To: &timestamp.Timestamp{Seconds: 1546387200}}
response, err := historicStub.HistoricArticles(context.Background(), historicRequest)
if err != nil {
    panic(err)
}
fmt.Println(len(response.Items))

Service for requesting historic data. Each request requires (at least) specification of the time window.

HistoricRequest

Request for entries.

| Field | Type | Label | Description |
|---|---|---|---|
| from | google.protobuf.Timestamp | | unix timestamp for start - included in results (greater or equal) |
| to | google.protobuf.Timestamp | | unix timestamp for end - excluded from results |
| filter | AssetsFilter | | |
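
Because `from` is included and `to` is excluded, the request describes a half-open time window. The sketch below shows how such a window behaves and how a long range could be cut into consecutive windows that neither overlap nor leave gaps. The helper names (`to_unix_seconds`, `in_window`, `split_window`) are hypothetical and the chunking itself is a client-side convenience, not something the API requires.

```python
from datetime import datetime, timezone
from typing import List, Tuple


def to_unix_seconds(moment: datetime) -> int:
    """Convert a timezone-aware datetime to whole unix seconds."""
    return int(moment.timestamp())


def in_window(ts: int, start: int, end: int) -> bool:
    """Half-open check: start is included, end is excluded."""
    return start <= ts < end


def split_window(start: int, end: int, step: int) -> List[Tuple[int, int]]:
    """Cut [start, end) into consecutive half-open chunks of at most `step` seconds."""
    if step <= 0:
        raise ValueError("step must be positive")
    chunks = []
    cursor = start
    while cursor < end:
        upper = min(cursor + step, end)
        chunks.append((cursor, upper))
        cursor = upper
    return chunks


new_year = to_unix_seconds(datetime(2019, 1, 1, tzinfo=timezone.utc))
daily = split_window(new_year, new_year + 2 * 86400, 86400)
```

Note that a request whose `from` and `to` are equal describes an empty window, so `to` should always be later than `from`.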

Additional

Common objects

Asset

represent one asset

| Field | Type | Label | Description |
|---|---|---|---|
| name | string | | |
| symbol | string | | symbol (can be duplicated) |
| code | string | | unique field |

AssetItems

| Field | Type | Label | Description |

|---|---|---|---|

| assets | Asset | repeated |

AssetsFilter

| Field | Type | Label | Description |

|---|---|---|---|

| assets | string | repeated | name of the asset - ie. BTC |

| all_assets | bool |

BaseModel

Base model for messages or news. It contains basic data such as title, content, source, published date, etc.

| Field | Type | Label | Description |
|---|---|---|---|
| id | string | | unique identifier with schema |
| title | string | | title of article |
| content | string | | full content stripped of unnecessary characters (js, html tags...) |
| crawler | string | | |
| pub_date | google.protobuf.Timestamp | | timestamp representing the datetime when the article was published |
| created | google.protobuf.Timestamp | | timestamp representing acquisition datetime |
| source | string | | url of article |
| excerpt | string | | summary provided by the domain |
| videos | string | repeated | list of video sources |
| images | string | repeated | list of image sources |
| links | string | repeated | list of off-page hyperlinks |
| author | string | | author of article |
| lang | string | | identified language |
| weight | double | | importance of the article's creator |

PublicModel

| Field | Type | Label | Description |
|---|---|---|---|
| id | string | | unique identifier with schema |
| title | string | | title of article |
| content | string | | full content stripped of unnecessary characters (js, html tags...) |
| pub_date | google.protobuf.Timestamp | | timestamp representing the datetime when the article was published |
| created | google.protobuf.Timestamp | | timestamp representing acquisition datetime |
| source | string | | url of article |
| excerpt | string | | summary provided by the domain |
| videos | string | repeated | list of video sources |
| images | string | repeated | list of image sources |
| links | string | repeated | list of off-page hyperlinks |
| domain | string | | |
| extensions | PublicModelExtensions | | additional fields, for now available only 'assets' |

PublicModelExtensions

additional fields to public model

| Field | Type | Label | Description |

|---|---|---|---|

| assets | string | repeated | assets found in publication |

UserMessage

Basic model for media where the messages are written by regular users

| Field | Type | Label | Description |
|---|---|---|---|
| base | BaseModel | | |
| sentiment | SentimentModel | | |
| named_entities | NamedEntitiesModel | | |
| user | string | | nickname of user |
| message | string | | text of message |

SentimentModel

Groups data that refers to the sentiment of a message

| Field | Type | Label | Description |
|---|---|---|---|
| sentiment | double | | analyzed sentiment <-1, 1> |
| market_impact | double | | analyzed impact in the respective area |

NamedEntitiesModel

Groups all types of named entities we support.

| Field | Type | Label | Description |

|---|---|---|---|

| symbols | string | repeated | list of crypto assets |

| assets | NamedEntityOccurrence | repeated | recognized cryptocurrencies |

| persons | NamedEntityOccurrence | repeated | recognized persons |

| companies | NamedEntityOccurrence | repeated | recognized companies |

| organizations | NamedEntityOccurrence | repeated | recognized organizations |

| locations | NamedEntityOccurrence | repeated | recognized locations |

| exchanges | NamedEntityOccurrence | repeated | recognized exchanges |

| misc | NamedEntityOccurrence | repeated | recognized misc objects |

| tags | string | repeated | list of assigned tags |

| asset_mentions | NamedEntitiesModel.AssetMentionsEntry | repeated | mapped asset to its mention count |

| source_text | string | | cleaned text which uses NER |

NamedEntity

Types of named entities

| Name | Number | Description |

|---|---|---|

| ASSET_ENTITY | 0 | |

| PERSON_ENTITY | 1 | |

| LOCATION_ENTITY | 2 | |

| COMPANY_ENTITY | 3 | |

| EXCHANGE_ENTITY | 4 | |

| MISC_ENTITY | 5 | |

| ORGANIZATION_ENTITY | 6 |

NamedEntitiesModel.AssetMentionsEntry

| Field | Type | Label | Description |
|---|---|---|---|
| key | string | | asset |
| value | int32 | | count |
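
In generated Python code a protobuf map field such as `asset_mentions` behaves like a dictionary from asset to mention count. The sketch below ranks assets by how often they are mentioned, using a plain `dict` as a stand-in for the map field. The helper name `top_assets` and the tie-breaking rule (alphabetical) are choices made for this illustration.

```python
from typing import Dict, List, Tuple


def top_assets(asset_mentions: Dict[str, int], limit: int = 3) -> List[Tuple[str, int]]:
    """Return up to `limit` (asset, count) pairs, most mentioned first.

    Ties are broken alphabetically so the result is deterministic.
    """
    ranked = sorted(asset_mentions.items(), key=lambda item: (-item[1], item[0]))
    return ranked[:limit]


# A plain dict stands in for NamedEntitiesModel.asset_mentions.
mentions = {"BTC": 5, "ETH": 2, "XRP": 5, "LTC": 1}
leaders = top_assets(mentions, limit=2)
```
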

NamedEntityOccurrence

Occurrence of a named entity. Contains its position, matched text and category.

| Field | Type | Label | Description |
|---|---|---|---|
| label | NamedEntity | | Represents NamedEntity element |
| start | uint32 | | Start position of occurrence |
| end | uint32 | | End position of occurrence |
| text | string | | Matched text |
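
The `start` and `end` positions can be used to locate an occurrence inside the text it was found in. The sketch below assumes that the positions are character offsets into `source_text` and that `end` is exclusive; neither is stated in this document, so verify against real data before relying on it. `Occurrence` is a plain stand-in for the generated message, and the helper names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Occurrence:
    """Plain stand-in for NamedEntityOccurrence."""
    label: str
    start: int
    end: int
    text: str


def extract(source_text: str, occurrence: Occurrence) -> str:
    """Slice the matched span (assumption: character offsets, end exclusive)."""
    return source_text[occurrence.start:occurrence.end]


def group_by_label(occurrences: List[Occurrence]) -> Dict[str, List[str]]:
    """Collect the matched texts per entity label, in order of appearance."""
    grouped: Dict[str, List[str]] = {}
    for occurrence in occurrences:
        grouped.setdefault(occurrence.label, []).append(occurrence.text)
    return grouped


source = "Bitcoin rallied on Binance"
found = [
    Occurrence("ASSET_ENTITY", 0, 7, "Bitcoin"),
    Occurrence("EXCHANGE_ENTITY", 19, 26, "Binance"),
]
spans = [extract(source, occurrence) for occurrence in found]
```

Comparing `extract(source, occurrence)` with `occurrence.text` is a quick way to check whether the offset assumption holds for a given message.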

Transaction

Stores useful information about transaction.

| Field | Type | Label | Description |

|---|---|---|---|

| id | string | ||

| hash | string | ||

| from_address | string | ||

| to_address | string | ||

| from_owner | string | ||

| to_owner | string | ||

| time | google.protobuf.Timestamp | ||

| comment | string | ||

| asset | string | ||

| size | double | ||

| USD_size | double |

Scalar Value Types

google.protobuf types

The following types are well-known types provided by Google. We list them here for reference.

| Field | Type | Label | Description |
|---|---|---|---|
| Timestamp | google.protobuf.Timestamp | | universal timestamp type |
| Empty | google.protobuf.Empty | | empty message |
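
A `google.protobuf.Timestamp` carries two fields, `seconds` and `nanos`, counted from the unix epoch in UTC. The sketch below converts such a pair into a Python `datetime` using only the standard library. The helper name `timestamp_to_datetime` is introduced here for illustration; the Python protobuf runtime also ships its own conversion method, `Timestamp.ToDatetime()`.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def timestamp_to_datetime(seconds: int, nanos: int = 0) -> datetime:
    """Convert Timestamp fields to an aware UTC datetime.

    Python datetimes have microsecond precision, so nanoseconds are truncated.
    """
    return EPOCH + timedelta(seconds=seconds, microseconds=nanos // 1000)


# 1561400800 is the start of the time window used in the historic examples.
window_start = timestamp_to_datetime(1561400800)
```
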